This is my first post on the UC-Lab Blog. I am studying at the University of Applied Sciences Hochschule Konstanz (HTWG) in Germany and I am writing my bachelor thesis within the Ubiquitous Computing Laboratory in cooperation with the University of Seville (ERASMUS program). My objective is to develop a grid-based system for data mining using Map-Reduce. In this blog I will document the progress of my bachelor thesis.

Here you can find more information about this and other projects of the UC-Lab: http://uc-lab.in.htwg-konstanz.de/ucl-projects.html

The system has to run on the Intel Galileo Gen 2 board (http://www.intel.com/content/www/us/en/do-it-yourself/galileo-maker-quark-board.html). Because of the limited resources of the boards, this is going to be one of the main aspects I have to focus on.

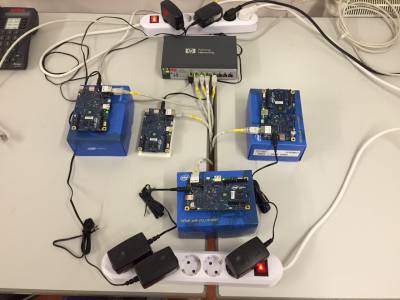

Figure 1 shows the setup of the four boards I develop on.

Figure 1: Setup of the Intel Galileo Gen 2 boards

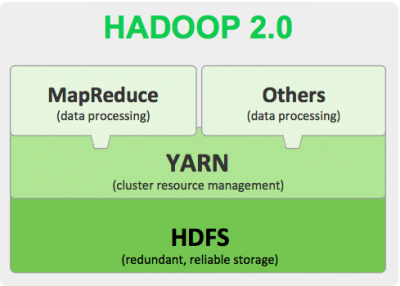

The Map-Reduce (MR) programming model, with Apache Hadoop as its best-known framework, is one of the most widely used models. It offers a simple programming model in which the end-user programmer only has to write the map and reduce tasks. However, Hadoop itself is an umbrella name for a federation of services such as HDFS, Hive, HBase and Map-Reduce (see Figure 2: Hadoop Architecture). Apache Storm and Apache Spark are distributed computation systems aimed at real-time and fast processing, and both can be used together with some of these services.

Figure 2: Hadoop Architecture

In a first field test, we set up a Hadoop cluster (v2.6) on five Intel Galileo development boards. Because of the boards' minimal resources (RAM, CPU), it was not possible to run the Hadoop system in an appropriate way. After infrastructural changes (the NameNode and ResourceManager were moved to a regular workstation PC), the system provided better performance and usability. Simple Map-Reduce jobs (e.g. WordCount) as well as more complex jobs (a recommendation system) work with acceptable performance, even for millions of data entries.
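To make the WordCount job mentioned above more concrete, here is a minimal sketch of the three Map-Reduce phases in plain Python. It is not Hadoop code and does not reflect the jobs we actually ran on the boards; the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are made up for illustration. In Hadoop, the shuffle step is done by the framework, and the programmer only writes the map and reduce parts.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by their key (the word)."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: sum the counts collected for one word."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle and reduce one after another on a single machine."""
    pairs = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    sample = ["the quick brown fox", "the lazy dog", "the fox"]
    print(word_count(sample))
```

On a real cluster the map calls run in parallel on the nodes that hold the input blocks, and the grouped pairs are sent over the network to the reducers. This sketch only shows the data flow.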

Apache Storm (https://storm.apache.org) can be used on top of Hadoop or run in standalone mode. Storm is a real-time, streaming computation system. It is an online framework, meaning, in this sense, a service that interacts with a running application. In contrast to Map-Reduce, it receives small pieces of data as they are processed in your application. You define a DAG (directed acyclic graph) of operations to perform on the data.
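The idea of a graph of operations working on small pieces of data can be sketched with Python generators. This is only an illustration of the streaming model, not the Storm API; the node names (`sentence_source`, `split_words`, `running_count`) and the helper `run_topology` are hypothetical. The graph here is a simple chain of three nodes, which is the smallest kind of DAG.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator


def sentence_source(sentences: Iterable[str]) -> Iterator[str]:
    """Source node: emits one sentence at a time, like an event stream."""
    for sentence in sentences:
        yield sentence


def split_words(stream: Iterable[str]) -> Iterator[str]:
    """Processing node: turns each incoming sentence into single words."""
    for sentence in stream:
        for word in sentence.lower().split():
            yield word


def running_count(stream: Iterable[str]) -> Iterator[Dict[str, int]]:
    """Processing node: keeps running totals and emits a snapshot per word."""
    counts: Counter = Counter()
    for word in stream:
        counts[word] += 1
        yield dict(counts)


def run_topology(sentences: Iterable[str]) -> Dict[str, int]:
    """Wire the nodes together (source -> split -> count), return last state."""
    last: Dict[str, int] = {}
    for snapshot in running_count(split_words(sentence_source(sentences))):
        last = snapshot
    return last


if __name__ == "__main__":
    print(run_topology(["storm is fast", "storm is online"]))
```

Each word flows through the whole chain as soon as it is emitted, so there is no waiting for a complete input file as in a Map-Reduce batch job.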

Storm doesn't (necessarily) have anything to do with persisting your data. Here, streaming is another way of saying that you keep the information you care about and throw the rest away. In reality, you probably have a persistence layer in your application that has already recorded the data, so this is a good and justified separation of concerns.

So far I haven't been able to get Storm running on the Intel Galileo boards. The main problem is the operating system running on the boards. I used an existing Yocto image and found out that some required services are missing. On the server, which runs Debian, the setup was no problem. I think that if I build my own image with the Yocto Project, Storm should run on the boards too.

The third option is Apache Spark (https://spark.apache.org). Just like Storm, Spark can be used on top of Hadoop or run in standalone mode.

One of the most interesting features of Spark is its smart use of memory. Map-Reduce has always worked primarily with data stored on disk. Spark, by contrast, can exploit the considerable amount of RAM that is spread across all the nodes in a cluster, and it uses the disk sensibly for overflow data and persistence. That gives Spark huge performance advantages for many workloads.
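Why keeping data in RAM helps can be shown with a small experiment in plain Python. This is a sketch of the general idea only, not Spark code; the `Dataset` class, `iterate` and `demo` are hypothetical names. An iterative job makes several passes over the same data: without a cache every pass goes back to the disk, with a cache only the first one does.

```python
import os
import tempfile
from typing import List, Optional, Tuple


class Dataset:
    """Hypothetical dataset wrapper that counts how often it reads the disk."""

    def __init__(self, path: str, cache: bool) -> None:
        self.path = path
        self.cache = cache
        self.disk_reads = 0
        self._cached: Optional[List[int]] = None

    def values(self) -> List[int]:
        # Serve from memory if an earlier pass already cached the data.
        if self._cached is not None:
            return self._cached
        self.disk_reads += 1
        with open(self.path) as handle:
            data = [int(line) for line in handle]
        if self.cache:
            self._cached = data
        return data


def iterate(dataset: Dataset, passes: int) -> int:
    """Run several passes over the data, as an iterative algorithm would."""
    total = 0
    for _ in range(passes):
        total += sum(dataset.values())
    return total


def demo(passes: int = 5) -> Tuple[Tuple[int, int], int, int]:
    """Compare disk reads for the uncached and the cached variant."""
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as handle:
        handle.write("\n".join(str(n) for n in range(1, 101)))
        path = handle.name
    try:
        on_disk = Dataset(path, cache=False)
        in_memory = Dataset(path, cache=True)
        totals = (iterate(on_disk, passes), iterate(in_memory, passes))
        return totals, on_disk.disk_reads, in_memory.disk_reads
    finally:
        os.remove(path)


if __name__ == "__main__":
    print(demo())
```

Both variants compute the same result, but the cached one touches the disk only once. The price is that the whole dataset has to fit into memory, which is exactly the scarce resource on small boards.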

We were able to set up Spark on the cluster, but because of the limited RAM, we weren't able to run a job on the boards successfully. With some optimization of the resource usage we might still get it running.

My next posts will contain a detailed tutorial on how I set up the different frameworks and what to look out for.